Gatekeeping AI Security: Lessons from DEFCON and the Copilot Hack

At DEFCON, researchers tore through Microsoft’s Copilot Studio agents with a series of prompt injection attacks.

As security researcher Michael Bargury reported on X, the exploit chain was shockingly simple, and the fallout was severe:

- Customer CRM records exposed

- Private tools revealed

- Unauthorized actions executed automatically, without human oversight

In other words: a handful of prompts turned “enterprise copilots” into data exfiltration machines.

Why this matters

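For years, AI has been marketed on speed and autonomy, with “no human in the loop” framed as a feature. In practice, it’s a hacker’s dream: once an agent can act on its own, a single injected instruction can turn into a real action before anyone notices.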

When an agent has access to sensitive business data and workflows, one overlooked vulnerability doesn’t just leak a response; it can compromise entire systems. At DEFCON, that meant Salesforce records, billing info, and internal comms. Tomorrow, it could mean medical records or financial transactions.

This wasn’t a theoretical lab demo. It was a real-world stress test of what happens when capability outpaces security.
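To make the alternative to “no human in the loop” concrete, here is a minimal sketch of an approval gate that an agent runtime could place in front of tool calls. Everything in it is hypothetical and for illustration only: the tool names, the `SENSITIVE_TOOLS` set, and the `approve` callback are not part of Copilot Studio or any vendor API. Low-risk calls run automatically, while anything that reads customer records or takes an action waits for a human decision.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict

# Hypothetical list of tools that touch sensitive data or cause side effects.
SENSITIVE_TOOLS = {"read_crm_records", "send_email", "update_billing"}


@dataclass
class ToolCall:
    """A tool invocation requested by the agent (possibly under injected instructions)."""
    name: str
    args: Dict[str, Any] = field(default_factory=dict)


class ActionRejected(Exception):
    """Raised when a human reviewer declines a sensitive tool call."""


def gated_execute(
    call: ToolCall,
    tools: Dict[str, Callable[..., Any]],
    approve: Callable[[ToolCall], bool],
) -> Any:
    """Run a tool call, pausing for human approval if the tool is sensitive."""
    if call.name not in tools:
        # Refuse any tool the agent was not explicitly given.
        raise KeyError(f"unknown tool: {call.name}")
    if call.name in SENSITIVE_TOOLS and not approve(call):
        raise ActionRejected(f"human reviewer rejected {call.name}")
    return tools[call.name](**call.args)


# Usage: hypothetical tools and a reviewer that denies everything.
tools = {
    "get_weather": lambda city: f"sunny in {city}",
    "read_crm_records": lambda account: f"records for {account}",
}


def deny_all(call: ToolCall) -> bool:
    return False


print(gated_execute(ToolCall("get_weather", {"city": "Paris"}), tools, deny_all))
# prints: sunny in Paris

try:
    gated_execute(ToolCall("read_crm_records", {"account": "acme"}), tools, deny_all)
except ActionRejected as err:
    print(err)  # prints: human reviewer rejected read_crm_records
```

A gate like this does not detect prompt injection on its own. It limits the blast radius: even if an attacker steers the agent, the sensitive step still needs a person to say yes.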

The gatekeeping problem

The uncomfortable truth is that most serious AI security work happens behind closed doors.

- Vendors run internal red-teams but rarely publish results.

- Frontier labs test their models but share little with the broader ecosystem.

- Researchers face barriers to reproducing or disclosing vulnerabilities.

That leaves startups, enterprises, and developers in the dark — adopting systems without clear visibility into their risks. Trust becomes a leap of faith.

Here’s the problem: security findings are treated as proprietary IP, while attackers share techniques openly. The imbalance benefits only one side.

Why openness is essential

Traditional software security learned this lesson the hard way. Today it relies on:

- Public CVE databases

- Coordinated vulnerability disclosure

- Bug bounty platforms

These aren’t nice-to-haves. They’re the infrastructure of trust.

AI needs its equivalent. Without it:

- Enterprises can’t evaluate model providers

- Regulators can’t enforce meaningful standards

- Developers can’t improve on a broken foundation

Our stance

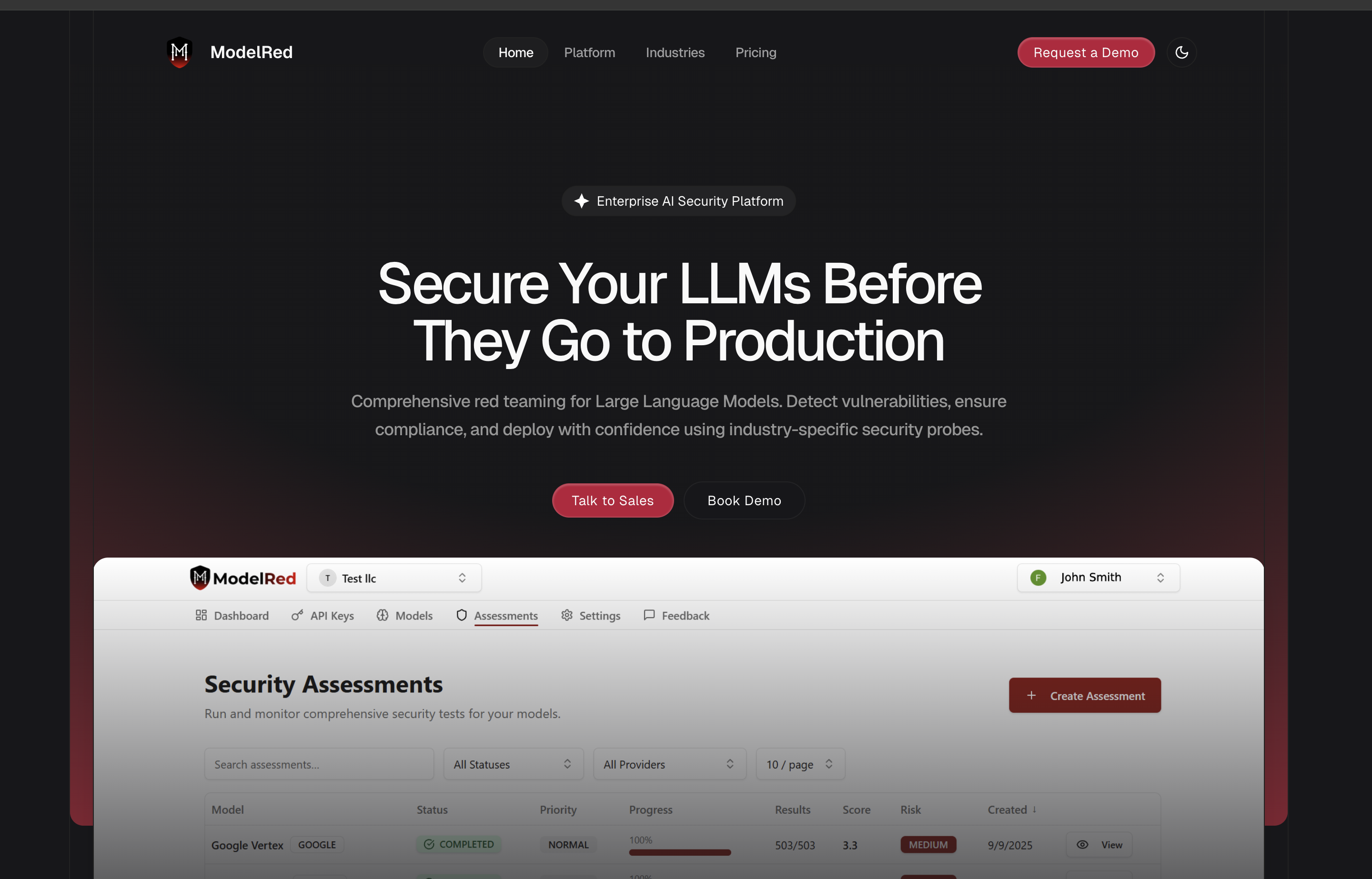

At ModelRed, we believe AI security can’t stay locked away. That’s why we:

- Build and publish transparent ModelRed Scores

- Run both general adversarial probes (prompt injections, data leakage, jailbreaks) and domain-specific scenarios (finance, healthcare, legal, education)

- Maintain a public leaderboard tracking how the most widely used models perform over time

Our belief: security shouldn’t be a private advantage for a few. It should be a shared baseline for everyone adopting AI.

The bigger picture

The DEFCON Copilot hack wasn’t just a one-off embarrassment for Microsoft. It was a warning shot for the entire industry.

If AI security knowledge stays siloed, we will repeat the same cycle: vendors overpromise, attackers exploit, enterprises pay the price.

The future of AI won’t be defined by who ships the biggest model. It will be defined by who earns trust. And trust only comes with transparency.

Want to see how your own models hold up under stress? [Request a demo].